Revolutionizing Large Language Models with Quantum Machine Learning

October 25, 2023

Introduction:

The emergence of large language models has ushered in a new era of artificial intelligence, enabling more natural and coherent communication between machines and humans. However, despite their remarkable capabilities, these models still grapple with limitations when it comes to generating original content and completing sentences. Enter quantum machine learning (QML), and in particular Grover’s quantum search algorithm, which we believe has the potential to address these issues. In this blog post, we explore the challenges faced by today’s generative AI language models and how Grover’s algorithm could pave the way for a revolution in natural language processing.

Challenges Faced by Today’s Generative AI Large Language Models:

Traditional machine learning methods, relying on probabilities and statistical inference, fall short when handling intricate, open-ended tasks such as language generation. Large language models today encounter several hurdles in producing creative, diverse content or finishing incomplete sentences:

- Lack of Diversity: Classical machine learning models tend to produce repetitive, formulaic output that lacks diversity and originality. This is because they rely on statistical patterns in the training data, which can result in the same combinations of words being used repeatedly.

- Limited Vocabulary: Even the largest language models have a finite vocabulary, which limits their ability to generate novel responses or complete partial sentences. This can lead to a lack of nuance and precision in their outputs.

- Difficulty with Ambiguity: Natural language is inherently ambiguous, with words and phrases having multiple meanings and contexts. Classical machine learning models struggle to capture this complexity, leading to confusion and errors.

- Inability to Handle Unexpected Inputs: Classical models are trained on a particular data distribution and often fail when they encounter unexpected or out-of-distribution inputs. This can result in nonsensical or inappropriate responses when the model encounters something it hasn’t seen before.
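To make the next-word problem and the "Lack of Diversity" point concrete, here is a minimal classical sketch. The vocabulary, the scores, and the function names are hypothetical illustrations, not taken from any real model; real LLMs work over tens of thousands of subword tokens and often sample from the distribution rather than decoding greedily. The point is simply that a greedy classical step scores every one of the N candidates and, given the same prompt, returns the same word every time.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_next_word(vocab, scores):
    """Return the most probable word by examining all N candidates."""
    probs = softmax(scores)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best]

# Hypothetical candidates for completing "The weather today is ..."
vocab = ["sunny", "rainy", "cloudy", "windy", "mild"]
scores = [2.1, 1.3, 1.9, 0.4, 0.7]

# Greedy decoding is deterministic: the same prompt yields the same word.
completions = [greedy_next_word(vocab, scores) for _ in range(3)]
print(completions)  # ['sunny', 'sunny', 'sunny']
```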

The Quantum Machine Learning (QML) Approach Using Grover’s Algorithm:

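Grover’s algorithm is a quantum search algorithm: given an oracle that recognizes the desired item, it finds that item among N unsorted candidates using on the order of √N oracle queries, whereas a classical search needs on the order of N. We propose applying this search, which leverages the principles of quantum mechanics, to predicting the next word in a sentence in order to address the challenges above. Here’s how it operates: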

- Quantum Parallelism: Grover’s algorithm begins by placing its qubits in an equal superposition of all N candidate states, so every possible solution is represented at once. In language generation, each basis state can encode one word of the vocabulary, so the algorithm acts on all candidate words together rather than traversing a list of options one at a time. A final measurement still returns a single outcome; the benefit comes from how the amplitudes are shaped before measurement. We expect this to allow more diverse and creative responses.

- Quantum Interference: Grover’s algorithm alternates two operations: an oracle that flips the phase of the states it recognizes as correct, and a diffusion step that reflects all amplitudes about their mean. The resulting interference amplifies the marked possibilities and suppresses the rest, letting the algorithm home in on the most appropriate response. We believe this can help capture subtle nuances in meaning and context that are difficult for classical models to detect.

- Quantum Entanglement: A Grover-based model can also use entanglement between the qubits that encode different parts of the input sequence, creating correlations that cannot be described by looking at each qubit on its own. We hypothesize that this could help capture long-range dependencies and the relationships between different words in a sentence.

- Robustness to Noisy Inputs: Because quantum states can represent multiple possibilities simultaneously, we expect quantum machine learning models built on algorithms like Grover’s to cope well with noisy or missing input data, which would make them well-suited to unexpected inputs or incomplete sentences. This is distinct from hardware noise: gate errors and decoherence degrade Grover’s algorithm on current devices.
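The parallelism and interference steps above can be checked with a small classical simulation of the quantum state. This is a minimal sketch, not the circuit from our whitepaper: it assumes a single "correct" word whose index the oracle already recognizes (called `marked` here), and the function name `grover_search` is hypothetical. Amplitudes are stored as plain Python floats, which suffices because the oracle and diffusion steps keep all amplitudes real.

```python
import math

def grover_search(n_qubits, marked, iterations=None):
    """Simulate Grover's algorithm for one marked basis state.

    Returns the measurement probability of every basis state."""
    N = 2 ** n_qubits
    if iterations is None:
        # Near-optimal iteration count for a single marked item.
        iterations = math.floor(math.pi / 4 * math.sqrt(N))
    # Quantum parallelism: equal superposition over all N candidates.
    state = [1 / math.sqrt(N)] * N
    for _ in range(iterations):
        # Oracle: flip the phase (sign) of the marked state only.
        state[marked] = -state[marked]
        # Diffusion: reflect every amplitude about the mean (interference).
        mean = sum(state) / N
        state = [2 * mean - a for a in state]
    return [a * a for a in state]

# 16 candidate "words" (4 qubits); the hypothetical correct word has index 5.
probs = grover_search(4, marked=5)
print(round(probs[5], 3))  # 0.961 after 3 iterations, versus 1/16 for a random guess
```

Note that running more iterations than the near-optimal count makes the probability fall again, so the iteration count has to be chosen from N rather than "as many as possible".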

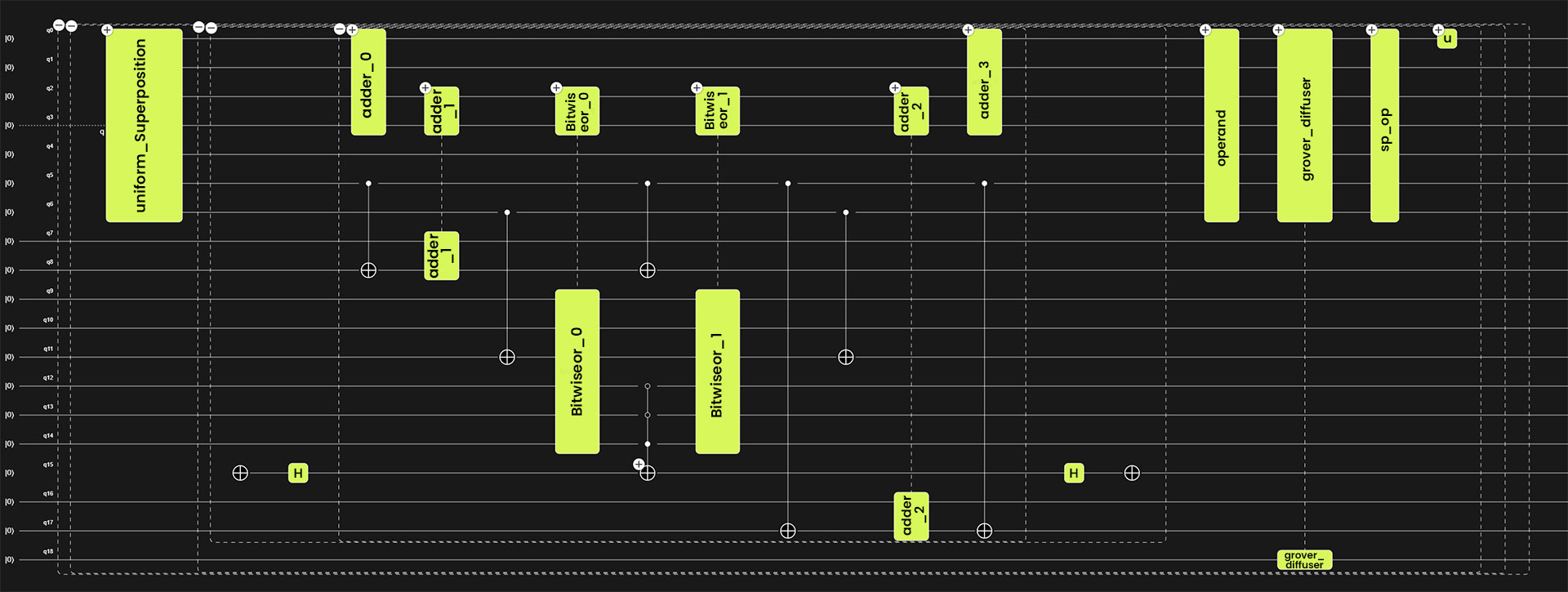

Figure 1. 4-qubit Grover’s algorithm circuit diagram.

Figure 1 shows a 4-qubit quantum search circuit that places Grover’s algorithm between a quantum encoder and a quantum decoder to identify the next word, that is, to predict the target word in a sentence when a user asks a question. Four qubits can index 2^4 = 16 candidate states. We will discuss the circuit diagram in depth in our whitepaper.
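For readers who want a gate-level picture, the sketch below lists the gates of a generic textbook 4-qubit Grover search. It is an assumption-laden illustration, not a transcription of Figure 1: it omits the encoder and decoder stages, treats qubit 0 as the least significant bit of the marked index (toolkits differ on bit ordering), and uses a hypothetical helper name and a simple `(gate, qubits)` tuple format rather than any quantum SDK's API. "MCZ" stands for a Z gate controlled on all the other qubits.

```python
import math

def grover_circuit_gates(n_qubits, marked):
    """Return a textbook Grover circuit as a list of (gate, qubits) tuples."""
    qubits = list(range(n_qubits))
    # Qubits whose bit in `marked` is 0 get X gates, so the multi-controlled
    # Z flips the phase of |marked> and of no other basis state.
    zero_bits = [q for q in qubits if not (marked >> q) & 1]
    iterations = math.floor(math.pi / 4 * math.sqrt(2 ** n_qubits))

    gates = [("H", qubits)]  # equal superposition over all basis states
    for _ in range(iterations):
        # Oracle: phase-flip |marked> only.
        if zero_bits:
            gates.append(("X", zero_bits))
        gates.append(("MCZ", qubits))
        if zero_bits:
            gates.append(("X", zero_bits))
        # Diffuser: reflection about the mean (up to a global phase).
        gates += [("H", qubits), ("X", qubits), ("MCZ", qubits),
                  ("X", qubits), ("H", qubits)]
    return gates

# Hypothetical target word at index 5 (binary 0101) in a 16-word space.
for gate, targets in grover_circuit_gates(4, marked=5):
    print(gate, targets)
```

With 4 qubits the loop runs 3 times, which matches the near-optimal iteration count for 16 candidates.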

Advantages of Grover’s Algorithm:

Quantum machine learning, with Grover’s algorithm at its forefront, holds immense promise for the future of language models and natural language processing. The advantages it brings are not limited to the challenges mentioned earlier; they extend to various other aspects of language understanding and generation.

- Improved Diversity and Creativity: Because Grover’s algorithm operates on a superposition of all candidate solutions, it could generate more diverse and creative responses. This can lead to more interesting and engaging interactions with humans.

- Enhanced Contextual Understanding: By capturing subtle nuances in meaning and context, Grover’s algorithm could better model the relationships between different words in a sentence, resulting in more accurate and informative responses.

- Ability to Handle Unexpected Inputs: Because it operates on quantum states that represent multiple possibilities simultaneously, Grover’s algorithm may handle unexpected inputs more gracefully, leading to more reliable and relevant responses in unpredictable situations.

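- Efficient Training: Grover’s algorithm reduces the number of oracle queries in an unstructured search from about N to about √N, a quadratic speedup. Where parts of training can be framed as such searches (for example, finding parameters or examples that satisfy a given criterion), this could reduce the time and resources required, making quantum approaches more practical for real-world applications.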
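To put numbers on the query savings, the sketch below compares the average number of oracle queries for a classical unstructured search (about N/2) with the near-optimal number of Grover iterations (one oracle query each) for a single marked word. It is only a query count, not a model of training cost: it ignores data loading, circuit depth, and error correction, and it assumes vocabulary sizes that are powers of two so that a plain layer of Hadamard gates prepares the uniform superposition. The function name is hypothetical.

```python
import math

def query_counts(vocab_size):
    """Return (qubits, average classical queries, Grover iterations)
    for finding one marked word among `vocab_size` candidates."""
    qubits = math.ceil(math.log2(vocab_size))  # qubits needed to index every word
    classical_avg = vocab_size / 2              # expected checks in a linear scan
    grover = math.floor(math.pi / 4 * math.sqrt(vocab_size))
    return qubits, classical_avg, grover

for n in (16, 1024, 65536):
    q, c, g = query_counts(n)
    print(f"N={n:>6}: {q:>2} qubits, ~{c:.0f} classical queries, {g} Grover iterations")
```

The gap grows with N, but only quadratically, so the speedup alone does not make large-vocabulary search cheap on noisy hardware.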

Challenges on the Path to Quantum Language Models:

As promising as quantum machine learning is for language models, several challenges must be addressed to realize its full potential:

- Scaling Complexity: Applying Grover’s algorithm to increasingly complex language models and datasets requires significant resources: more qubits to index larger vocabularies, and deeper circuits because the number of Grover iterations grows with √N. Researchers are actively exploring methods to scale quantum models efficiently.

- Hardware Limitations: Quantum hardware is still in its infancy, with limited qubits and noisy operations. Achieving practical quantum advantage for language models necessitates advancements in quantum hardware.

- Algorithm Optimization: Fine-tuning and optimizing Grover’s algorithm for specific language tasks, including designing an oracle that recognizes a suitable next word, remain areas of active research, as its full potential in diverse language applications is yet to be realized.

- Interdisciplinary Collaboration: Effective integration of quantum computing into natural language processing requires collaboration between quantum physicists and language model experts, bridging the gap between two distinct domains.
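To see why noisy operations matter, consider a deliberately simple noise model, which is our assumption and not a description of any particular device: after every Grover iteration, a global depolarizing channel replaces the state with the maximally mixed state with probability p. Because every Grover iteration leaves the maximally mixed state unchanged, the noiseless component survives with weight (1 − p)^k after k iterations, giving a success probability of (1 − p)^k · sin²((2k + 1)θ) + (1 − (1 − p)^k)/N, where sin θ = 1/√N. The function name below is hypothetical.

```python
import math

def noisy_success_probability(n_qubits, iterations, p):
    """Grover success probability (one marked item) when a global
    depolarizing channel of strength p acts after every iteration.

    Simplified noise model (an assumption): real hardware errors are
    gate-specific and correlated."""
    N = 2 ** n_qubits
    theta = math.asin(1 / math.sqrt(N))
    ideal = math.sin((2 * iterations + 1) * theta) ** 2  # noiseless result
    survive = (1 - p) ** iterations  # weight of the noiseless component
    # The mixed component behaves like a uniform random guess: 1/N.
    return survive * ideal + (1 - survive) / N

# 4 qubits, 3 iterations, increasing noise strength.
for p in (0.0, 0.05, 0.2):
    print(p, round(noisy_success_probability(4, 3, p), 3))
```

As p grows, the result drifts toward the 1/N success rate of random guessing, and larger N needs more iterations, so the noise compounds.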

Conclusion:

In conclusion, Grover’s algorithm has the potential to reshape the landscape of natural language processing by offering a fundamentally new approach to language generation tasks. By harnessing the power of quantum computing, Grover’s algorithm could produce more diverse and creative responses, comprehend context and meaning with greater depth, and adapt to unforeseen inputs seamlessly.

The implications of this technology are profound. Picture a virtual assistant that converses in a manner indistinguishable from a human. Imagine generating creative writing, such as poetry or short stories, with an AI system suggesting coherent and innovative sentences and paragraphs.

Certainly, there are challenges ahead before we can fully harness the potential of Grover’s algorithm. Scaling the algorithm to larger datasets and more complex tasks remains a concern, as does the need for a deeper understanding of its underlying physics to optimize performance and ensure reliability. Nevertheless, quantum machine learning stands at the threshold of transforming language models into truly intelligent and adaptable conversation partners, revolutionizing the way we interact with AI.