Abstract

Overview

Foreword

Artificial intelligence technology is utilized in diverse areas, such as entertainment, shopping, predictive insurance claims, and autonomous driving. However, it is becoming increasingly evident that artificial intelligence (AI) may have potential drawbacks as it becomes more involved in decision-making.

To ensure that AI does not perpetuate racism and bias, it is vital to carefully examine AI models, minimize data risks, and take steps to avoid discrimination against minority communities.

In this study, we delve into the integration of ethical principles in developing AI products, data standards, data security, and fairness assessments of AI models. We aim to provide chief experience officers (CXOs) with knowledge and skills to avoid penalties for unethical AI and data practices while promoting trust and transparency when implementing AI solutions.

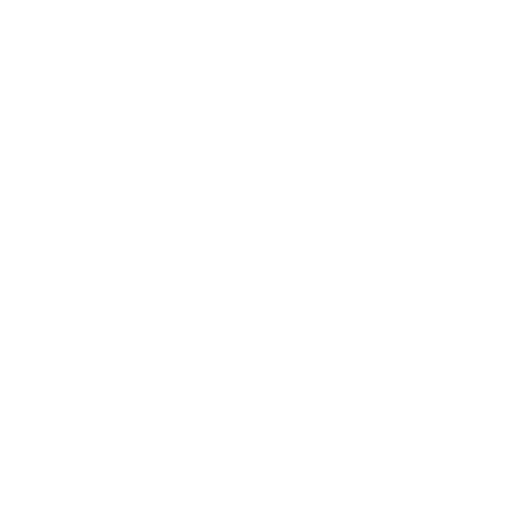

The Prevalence of AI

In 2023, there were significant advancements in artificial intelligence, and OpenAI’s ChatGPT stands out as a prime example. ChatGPT’s underlying model reportedly has about twenty billion parameters, enabling it to handle intricate natural language processing tasks and provide detailed answers. Businesses can benefit significantly from AI technology, which can help predict outcomes, optimize operations, automate tasks, increase efficiency, reduce waste, and discover new opportunities. Artificial intelligence has also become part of everyday routines through personalized voice assistants, Netflix recommendations, and smart homes. The AI market is projected to grow at a compound annual growth rate (CAGR) of 38.0% from 2021 to 2030, driven by growing organizational AI budgets and advances in low-cost cloud infrastructure.
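To make the projected growth rate concrete, the short sketch below compounds a 38.0% annual rate over the period from 2021 to 2030. It assumes nine annual compounding periods and a constant rate; the function name is illustrative only.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return the factor by which a quantity grows at a constant annual rate."""
    return (1.0 + cagr) ** years


# Assumption: nine compounding periods between 2021 and 2030.
multiple = growth_multiple(0.38, 2030 - 2021)
print(f"Market size multiple by 2030: about {multiple:.1f}x the 2021 level")
```

Under these assumptions, a 38.0% CAGR implies a market roughly eighteen times its 2021 size by 2030.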

While AI is helpful, it is fallible, and its failures can have severe consequences such as legal action, fines, and users’ loss of trust in the organizations that deploy it.

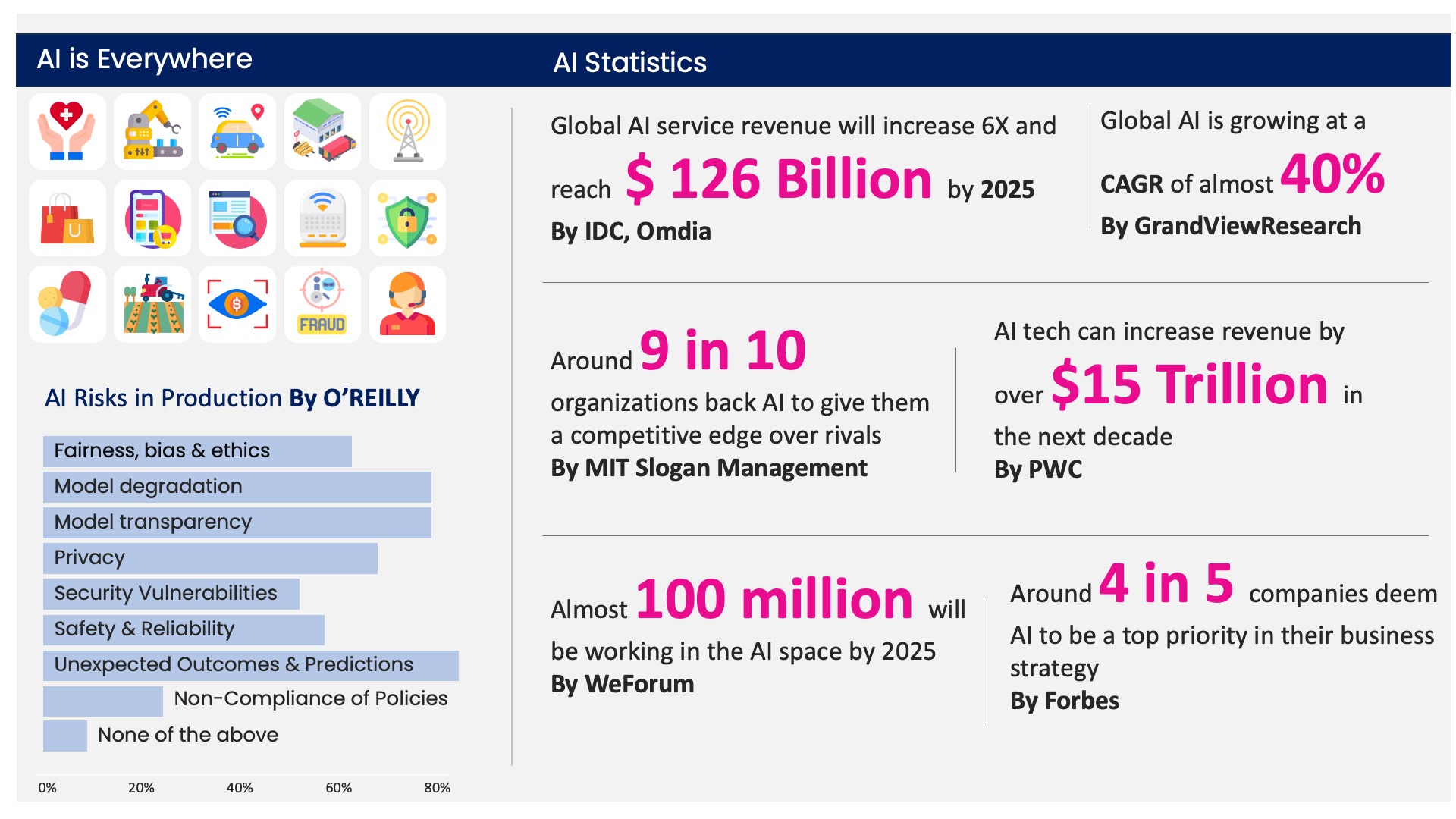

Examples of AI Gone Wrong

Although AI has numerous benefits in improving productivity and creating innovative products and services, its use raises ethical, governance, and legal concerns. There is a risk of biased outcomes, incorrect applications, the mishandling of confidential information, and a lack of accountability and transparency in certain situations.

Occasionally, AI has not met expectations, causing ethical concerns that can harm a company’s reputation and have far-reaching consequences for society, finance, law, politics, security, and privacy.

The Challenge

Ethical Dilemmas in AI

Top 10 AI Ethical Dilemma Questions:

From users, industries, governments, and AI creators

- What problem is your AI solution designed to solve?

- What datasets train your AI models?

- Is there a way to ensure the datasets are contamination-free?

- Does your AI solution access sensitive or personal data without the user’s or industry’s consent?

- What security measures have you implemented throughout the AI lifecycle to prevent data loss or tampering?

- How does your model make decisions, and what are the underlying assumptions, biases, or discriminatory decisions?

- Are your AI models explainable, justifiable, and well-documented with data and model versions for auditing?

- Have you involved a human expert in evaluating your AI solution, and how do you guarantee that the expert report has not been compromised?

- Can you explain the key parameters that led to your solution’s market approval?

- How does your AI solution impact people negatively, and what are its benefits?

The impact of AI on the digital world has been significant, particularly in areas such as healthcare, fraud detection, search results, ads, and news feeds. However, it is crucial to prevent biases and discrimination, so governments have implemented AI ethics policies.

Incoming AI Ethics Laws

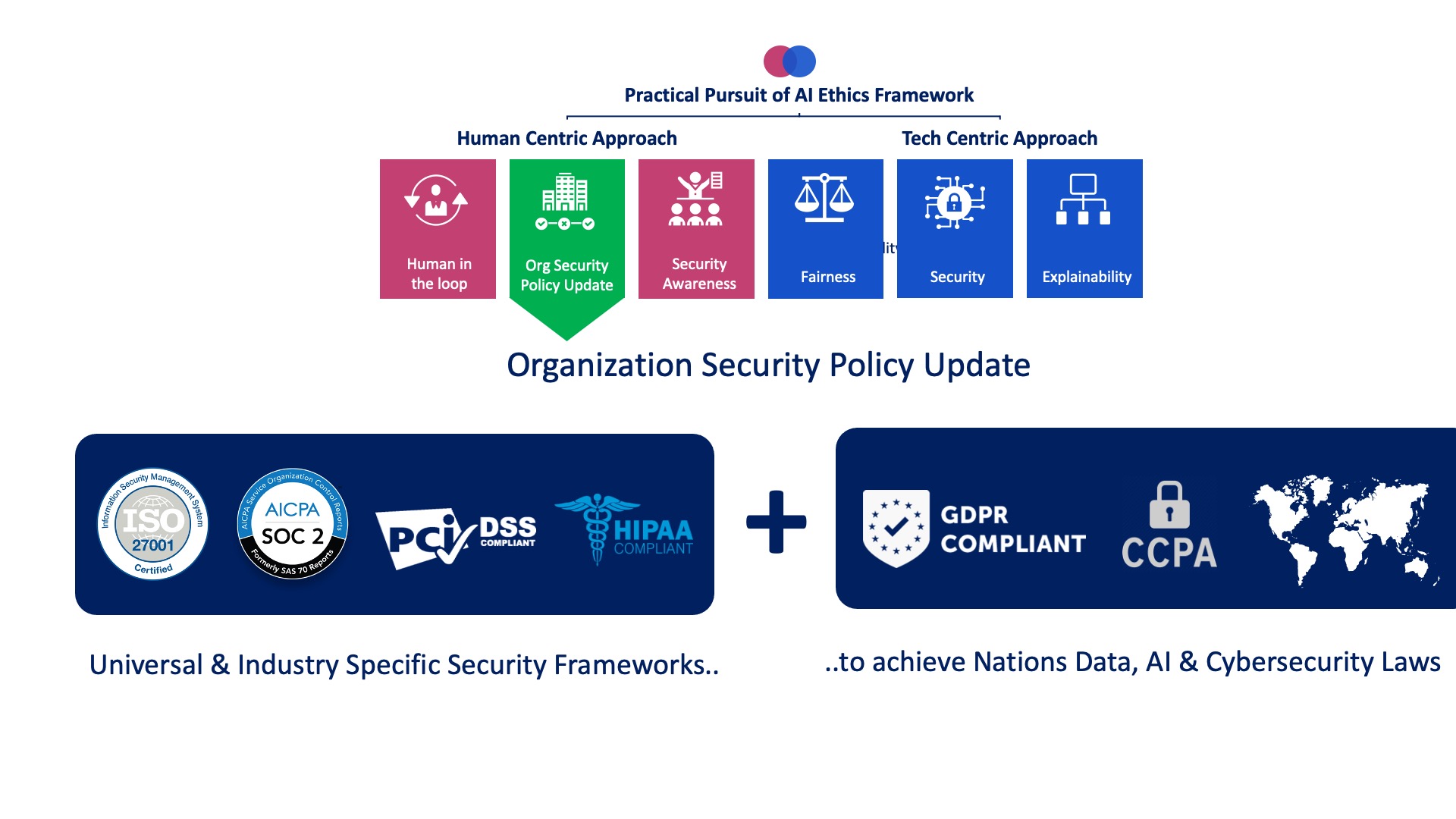

Governments, agencies, and lawmakers have introduced regulations to ensure the reliability of AI systems, and businesses must follow ethical guidelines throughout the AI lifecycle to avoid penalties, including fines of up to 6% of their global annual turnover or potential criminal charges.

The European Union (EU) has implemented the General Data Protection Regulation (GDPR) to safeguard data while proposing a legal framework for AI to address ethical issues. In addition, AI ethics laws have been implemented in many countries to reduce the adverse effects of AI on politics, society, privacy, and community well-being.

Is Your Organization Prepared for AI Law Adoption?

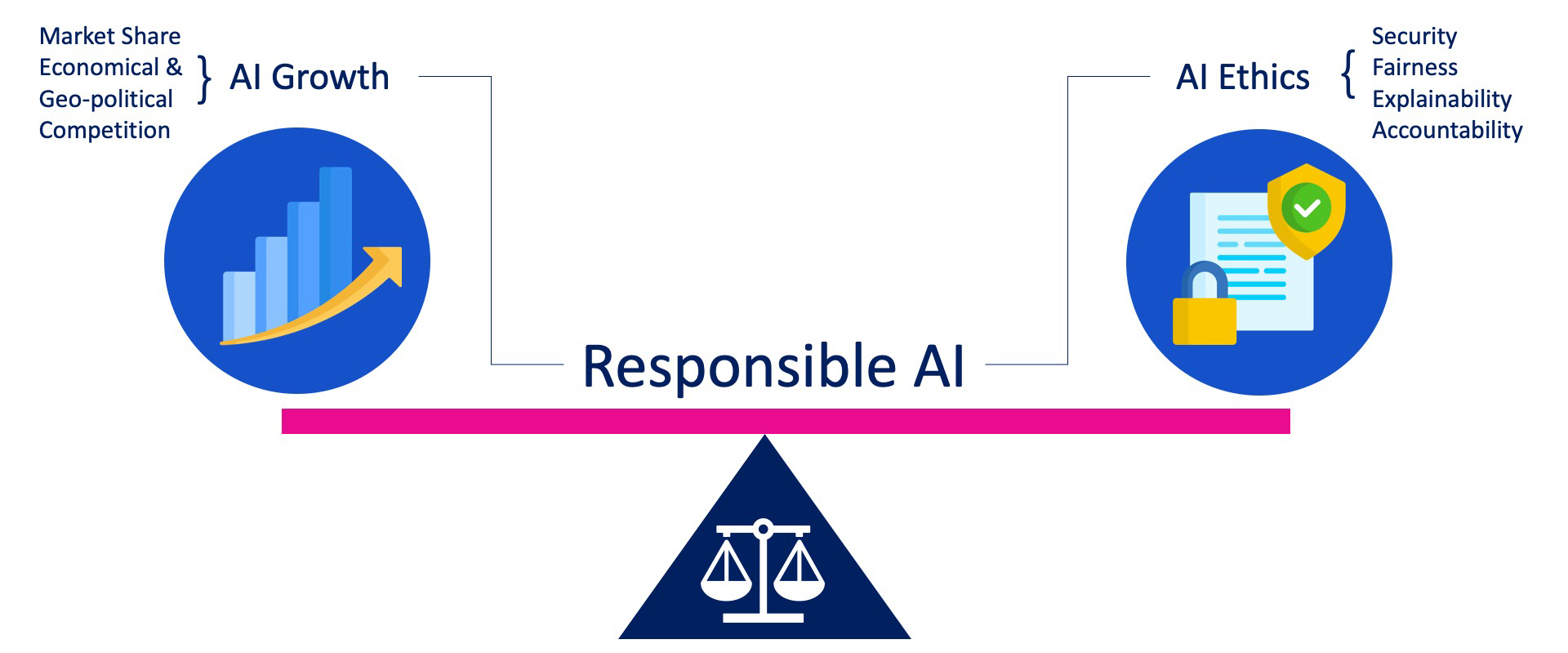

Four crucial principles of AI ethics are becoming increasingly important globally: security, fairness, explainability, and accountability. It is essential that all parties involved in the development of AI solutions, including AI companies, developers, deployers, project managers, and business owners, fully understand and implement these principles at every stage to ensure that the solutions are trustworthy and transparent.

Fairness, security, explainability, and accountability are all critical in designing and implementing AI systems. Fairness can be achieved by removing bias from the datasets and the design. Security measures, such as encrypting data and protecting secret keys, safeguard AI systems against evasion, data poisoning, inference, and extraction attacks. Explainability comes from offering information about data, models, model cards, and algorithms, which makes AI systems more understandable to non-technical audiences. Accountability requires that AI systems be audited and held accountable for negative impacts on society and ethics, using the human-in-the-loop approach and an immutable audit log, which help quickly identify the underlying causes of any problems that arise.

Non-Compliance Costs

The EU’s proposed AI Act introduces penalties for non-compliance, including significant fines of up to thirty million euros for violating prohibitions related to AI systems. Small to medium-sized enterprises and startups face lower fines, but providing false information during AI audits can lead to criminal charges and penalties of up to ten million euros or 2% of turnover.

If a company fails to comply with the prohibitions, Article 71(3) states that it may face a penalty of up to thirty million euros or 6% of its turnover. In addition, Article 71(4) specifies that failure to meet obligations may result in a penalty of up to twenty million euros or 4% of the company’s turnover. Furthermore, providing false or incomplete information can lead to a fine of up to ten million euros or 2% of the company’s turnover, as stated in Article 71(5).
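The three penalty tiers above can be expressed as a small lookup, which is useful for rough exposure estimates. This is a minimal sketch, not legal advice: the tier names and the `max_fine_eur` helper are hypothetical, and it assumes that the higher of the fixed amount and the turnover percentage applies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    article: str           # paragraph of Article 71 cited in the text
    fixed_cap_eur: float   # fixed ceiling in euros
    turnover_share: float  # ceiling as a share of annual turnover


# Tier names are illustrative labels, not terms from the regulation.
TIERS = {
    "prohibited_practice": PenaltyTier("71(3)", 30_000_000, 0.06),
    "other_obligation": PenaltyTier("71(4)", 20_000_000, 0.04),
    "false_information": PenaltyTier("71(5)", 10_000_000, 0.02),
}


def max_fine_eur(violation: str, annual_turnover_eur: float) -> float:
    """Estimate the ceiling of a fine for one violation type."""
    tier = TIERS[violation]
    # Assumption: whichever ceiling is higher applies.
    return max(tier.fixed_cap_eur, tier.turnover_share * annual_turnover_eur)


# A company with one billion euros in turnover that violates a prohibition.
print(f"{max_fine_eur('prohibited_practice', 1_000_000_000):,.0f} EUR")
```

For a company with low turnover, the fixed amount dominates; for a large enterprise, the percentage does.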

The Solution

Practical Application Framework for AI Ethics

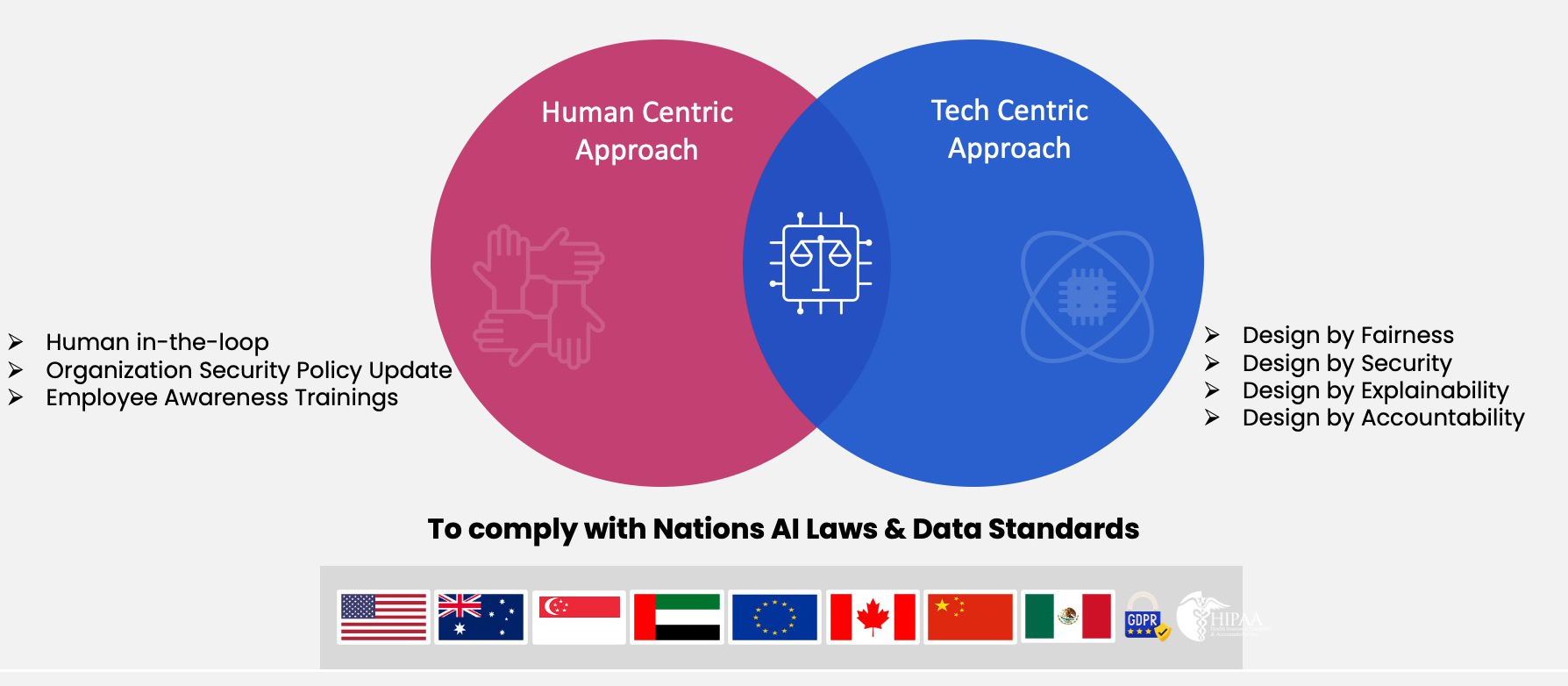

Successfully implementing AI solutions involves more than just theoretical knowledge. During the development process, our team researched and analyzed the top AI regulations and policies in high-risk industries such as healthcare, finance, and defense and developed a practical application framework for AI ethics that considers both human and technological factors.

Although modern technologies can expedite AI advancements, humans must address the ethical concerns that arise as a result. Incorporating human-centered and technology-centered approaches into an AI ethics framework can enhance security, fairness, explainability, and accountability while reducing legal and ethical risks.

Human-Centric Approach

To prevent AI from posing a threat to society, it is crucial to prioritize the needs of people, seek feedback, assess its impact, strengthen security protocols, and provide ongoing training to national policymakers.

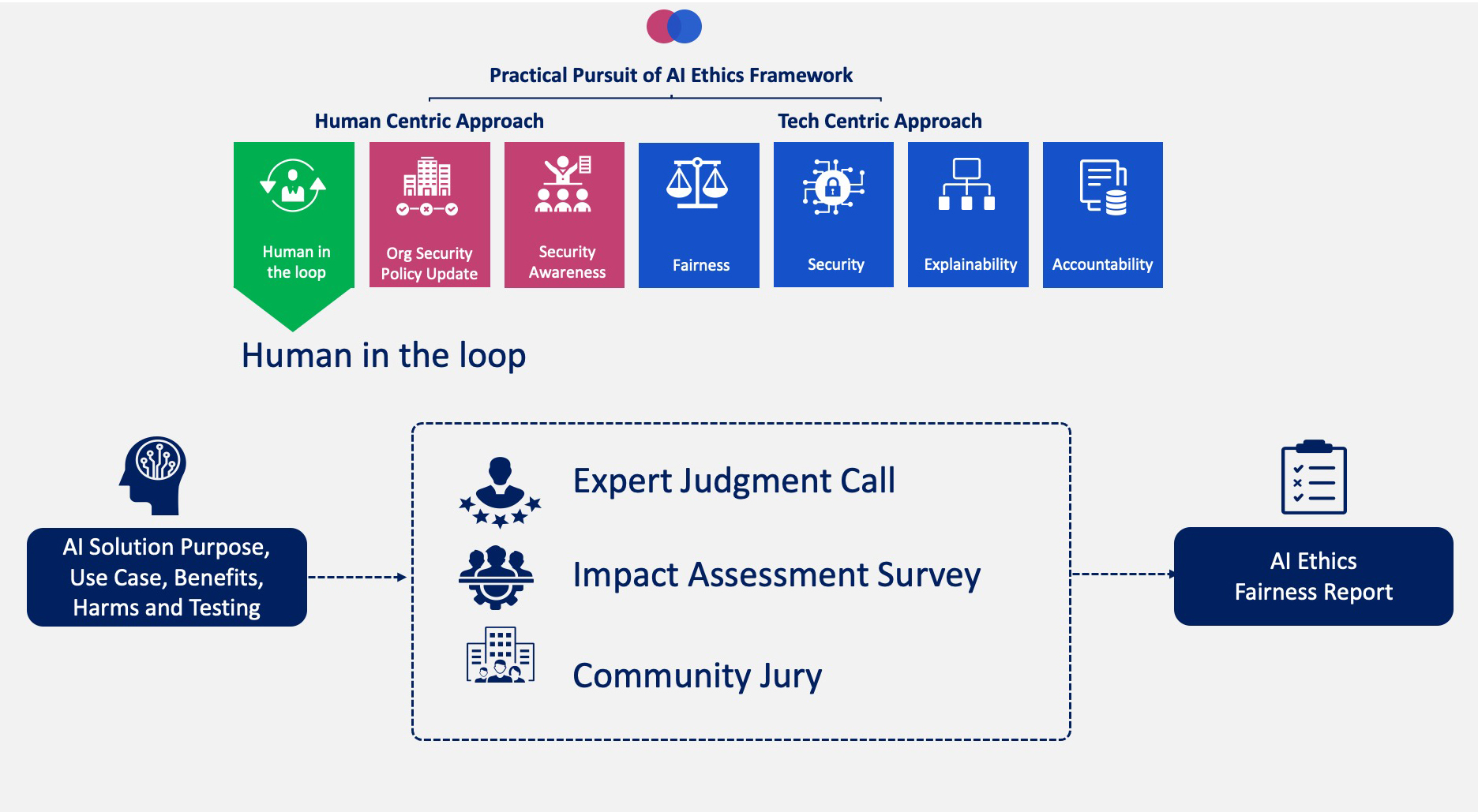

Human in the Loop

Using the human-in-the-loop approach is crucial for evaluating the impact of AI on citizens, communities, and customers. It involves cross-functional resources to ensure positive effects and fair development.

To ensure the transparency and accuracy of AI products, it is crucial to involve experts in evaluating data collection, model training, security protocols, and real-world applications. Conducting an impact assessment survey and collaborating with executives, AI product managers, and development teams can help identify potential risks to stakeholders and prompt the necessary actions. Impartiality can be ensured by including a community jury of people from diverse backgrounds who take part in evaluation sessions with AI developers to examine the product’s impact, benefits, and drawbacks.

Updating Organization Security Policies

HIPAA regulations require companies that handle patient data to protect it, and following security standards such as HITRUST CSF helps them demonstrate compliance and avoid fines.

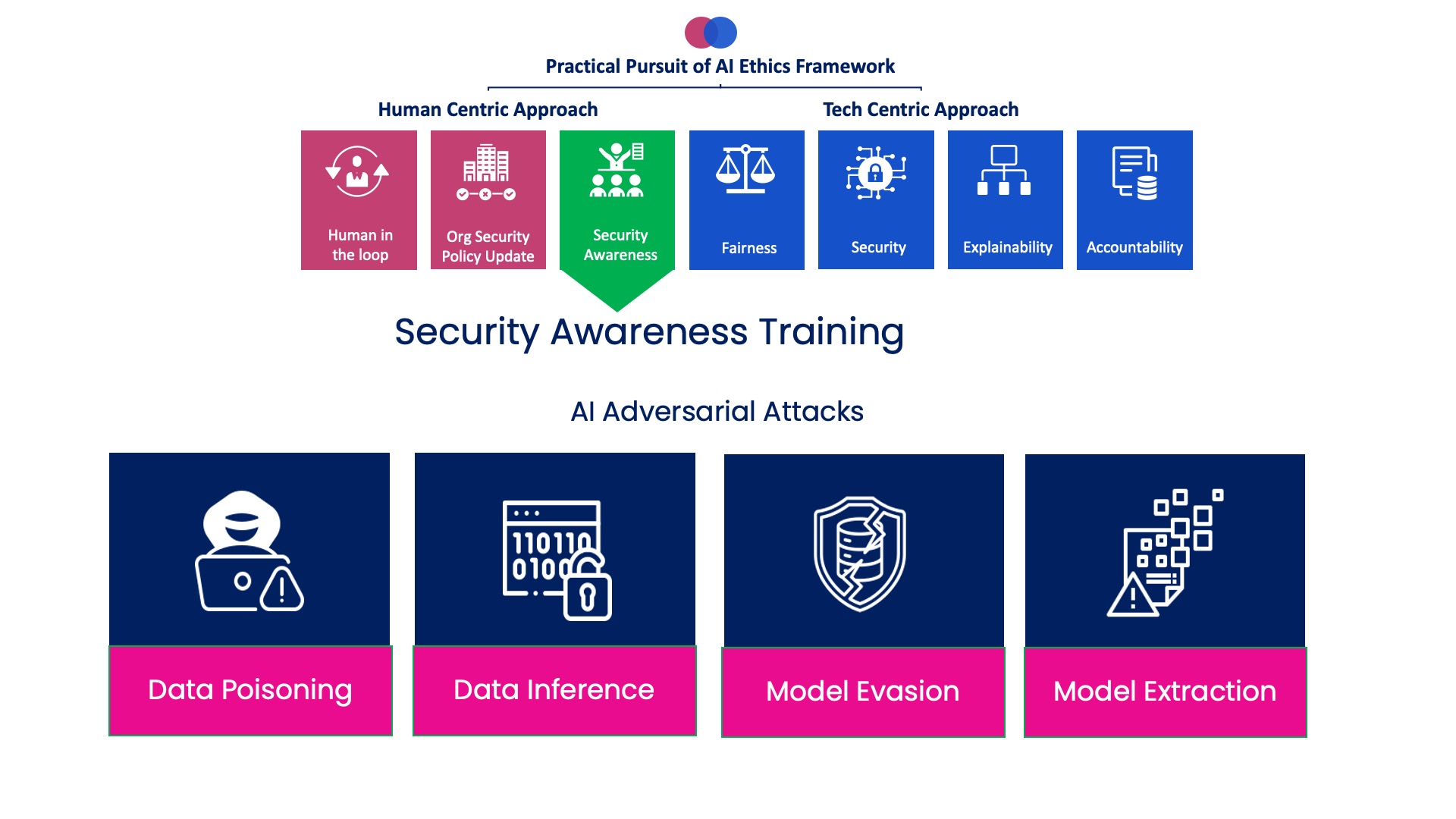

Security Awareness Training for AI Building

The security of modern organizations is not the responsibility of one team alone; it is a shared responsibility, which means that all employees must stay informed of the latest cyber threats and take the necessary measures to protect themselves. In 2022, cybersecurity professionals alerted AI developers to potential adversarial attacks such as data poisoning, data inference, model evasion, model extraction, and smart phishing.

AI teams must have the right tools and knowledge to manage adversarial attacks to ensure safety and reliability in high-risk scenarios. Implementing advanced defense mechanisms such as randomizing inputs, analyzing error patterns, and modifying input features is crucial in achieving this goal.
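As one illustration of input randomization, the sketch below classifies several noisy copies of an input and returns the majority label, so that a small adversarial perturbation is less likely to flip the outcome. The `toy_classifier`, the noise scale, and the sample count are hypothetical stand-ins; a production defense would need tuning and evaluation against real attacks.

```python
import random
from collections import Counter
from typing import Callable, Sequence


def randomized_predict(
    classify: Callable[[Sequence[float]], str],
    features: Sequence[float],
    noise_scale: float = 0.05,
    n_samples: int = 25,
    seed: int = 0,
) -> str:
    """Return the majority label over randomly perturbed copies of the input."""
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(n_samples):
        # Add independent Gaussian noise to every feature.
        noisy = [x + rng.gauss(0.0, noise_scale) for x in features]
        votes[classify(noisy)] += 1
    return votes.most_common(1)[0][0]


def toy_classifier(features: Sequence[float]) -> str:
    """Hypothetical model: approve when the feature sum exceeds a threshold."""
    return "approve" if sum(features) > 1.0 else "reject"


print(randomized_predict(toy_classifier, [0.9, 0.8, 0.7]))
```

The fixed seed keeps the example reproducible; in deployment the noise should be unpredictable to an attacker.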

Technology-Centric Approach

To ensure ethical AI, companies should prioritize human values and use appropriate tools and strategies to avoid bias, safety failures, and privacy violations. Open-source platforms, data monitoring tools, and black-box algorithm interpreters can help achieve fairness, security, interpretability, and responsibility.

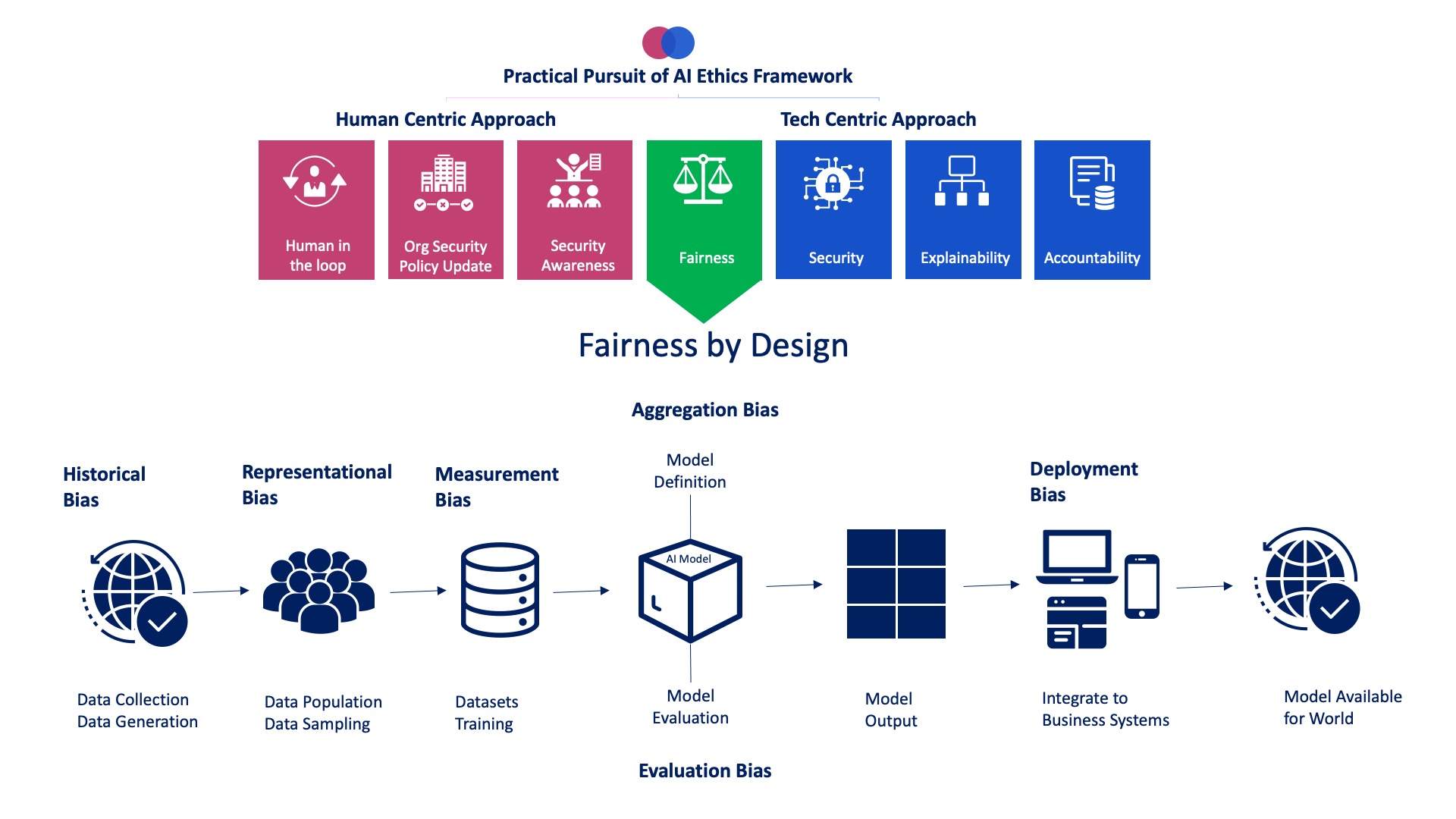

Fairness by Design

AI developers should follow several best practices to ensure fairness and prevent biased results. The most important is to use relevant and accurate data sourced with integrity. Developers should also make defensible correlations and inferences when creating their AI models and strive for a statistical balance between true and false positives across the groups the model affects. Lastly, it is crucial to train models without bias to achieve a fair implementation.

Historical bias can be attributed to past circumstances that have resulted in job titles such as “engineer” and “nurse” being associated with gendered language. To ensure fairness and accuracy in dataset development and improvement, it is essential to consider global and social inequality, ethnic minorities, and cultural sensitivities.

When examining data collected from a population, ensure that the sample data represents all population segments. If conclusions are drawn from a small subset of data, they may be inaccurate. For example, the ImageNet dataset consists of 1.2 million images from various geographical regions. However, only 1% of the images were captured in China, 2.1% in India, and 45% in the United States.
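A representation check of this kind can be automated. The sketch below compares each region's share of a sample against a reference population share and flags regions that fall well short. The sample, the reference shares, and the 0.5 tolerance are hypothetical values chosen for illustration, not figures from any real dataset.

```python
from collections import Counter


def region_shares(regions):
    """Return each region's fraction of the sample."""
    counts = Counter(regions)
    total = sum(counts.values())
    return {region: count / total for region, count in counts.items()}


def underrepresented(sample_shares, population_shares, tolerance=0.5):
    """Flag regions whose sample share is below tolerance * population share."""
    return sorted(
        region
        for region, pop_share in population_shares.items()
        if sample_shares.get(region, 0.0) < tolerance * pop_share
    )


# Hypothetical sample of 100 image origins and illustrative reference shares.
sample = ["US"] * 45 + ["IN"] * 2 + ["CN"] * 1 + ["other"] * 52
reference = {"US": 0.04, "IN": 0.18, "CN": 0.18, "other": 0.60}

print(underrepresented(region_shares(sample), reference))  # ['CN', 'IN']
```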

Measurement bias should be considered when selecting, gathering, or calculating features or labels for a model. For instance, a pretrial bail release model, which predicts the likelihood of recommitting crime, has allegedly exhibited biased outcomes for minority neighborhoods that are heavily policed and a greater false positive rate for Black defendants due to the “arrest” variable being used to measure the act of “recommitting crime.”
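One way to surface the disparity described above is to compute the false positive rate separately for each group. The sketch below is a minimal, library-free version; the labels, predictions, and group names are made-up toy data.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups):
    """Return FP / (FP + TN) per group, using 1 for the positive class."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only actual negatives can become false positives
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items()}


# Toy data: 1 means "predicted or observed to reoffend".
y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

print(false_positive_rates(y_true, y_pred, groups))  # {'A': 0.5, 'B': 0.25}
```

A large gap between groups, as in this toy output, is a signal to revisit the features and labels used, such as a proxy variable that measures policing intensity rather than behavior.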

Aggregate bias can occur when a single model is utilized to analyze different data subsets without considering their unique characteristics. When conducting natural language processing (NLP) on Twitter data, it is crucial to consider emojis, hashtags, words, and phrases used, as they can significantly affect the interpretation of the data. Neglecting to consider regional or group-specific context and applying a generic NLP model to social media data can lead to incorrect classifications and unreliable conclusions.

Evaluation bias is exhibited when a model is evaluated on benchmark data that does not accurately reflect the intended population. A model that performs well on such a benchmark may still perform poorly on the groups the benchmark underrepresents, so its reported accuracy misrepresents real-world performance. For example, a gender classification AI tool developed for commercial use failed in the real world due to the disproportionate number of lighter-skinned subjects in the IARPA Janus Benchmark A (IJB-A) and Adience benchmark datasets.

Deployment bias can occur when an AI model built to address one problem is used to solve a different one. For example, a tool designed to inform pretrial bail decisions may exhibit bias when it is also used to determine sentence length, resulting in a longer sentence for a defendant.

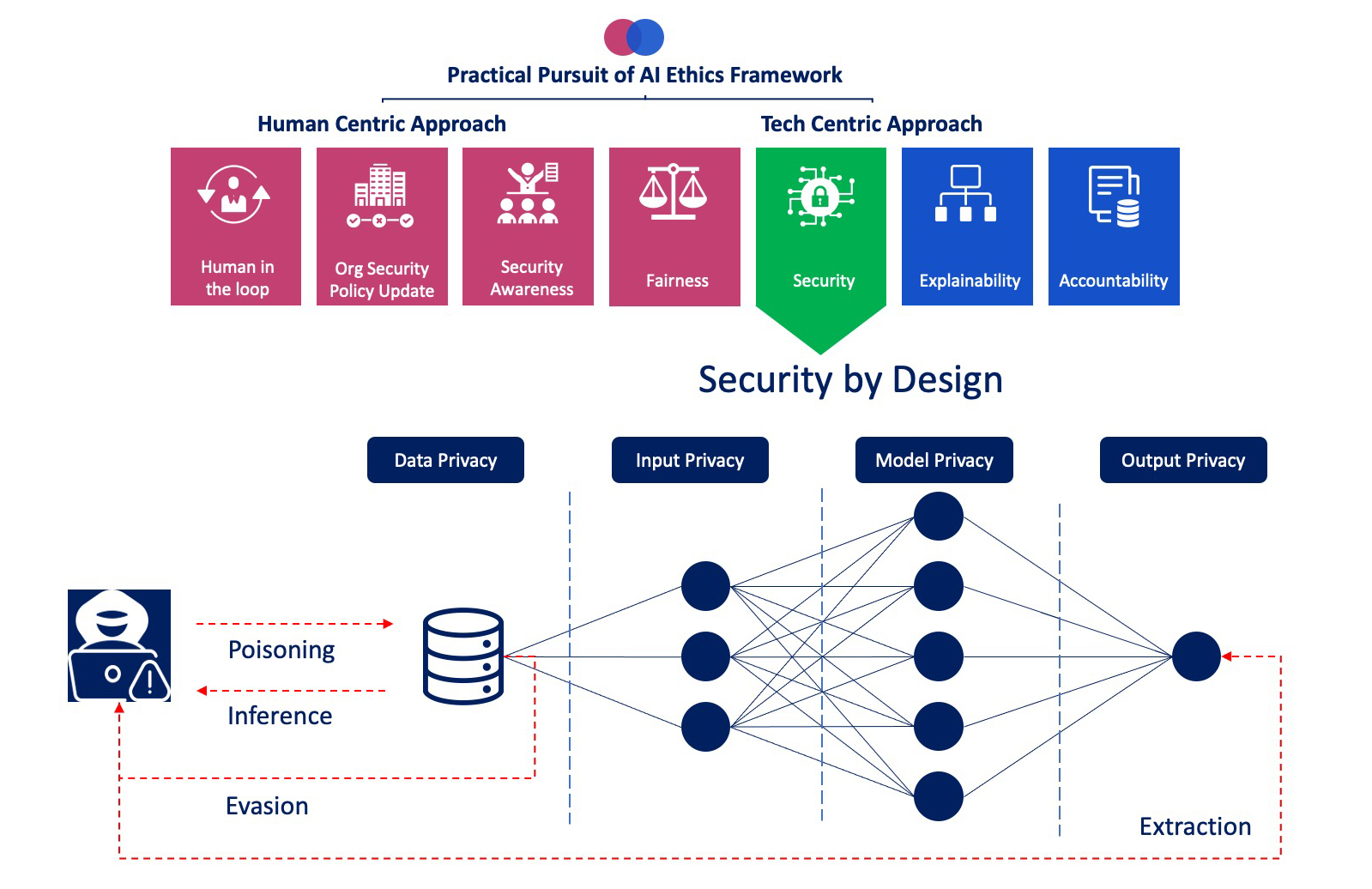

Security by Design

Security by design rests on four pillars of privacy: training data, input, output, and model. Training data privacy prevents malicious actors from using inference attacks to reverse engineer the training data or poisoning attacks to corrupt it. Input privacy ensures that no one, including the model creator, can observe a user’s input data, and that inputs manipulated with evasion techniques are detected. Output privacy means that only authorized users can see the model’s output, and any unauthorized attempts to extract data should be blocked. Finally, model privacy prevents unauthorized parties from accessing or stealing the model.

Measures for satisfying the four pillars of security by design.

To ensure the safety of your data, several measures can be taken. For vulnerable components, you can use secure virtual private networks, web filtering, patch management, identity and access management, and data encryption. When transferring data, it is essential to use secure key management, encryption, and automatic detection of any unintended access. Storage can be protected with multi-factor authentication (MFA), deleting functions, immutable logs, versioning, 24/7 monitoring, policies, and alerts. Homomorphic encryption allows computation on encrypted data, so sensitive data stays protected while machine learning models are trained, and federated learning, an approach introduced by Google, allows collective training without sharing raw data. Finally, secure multi-party computation enables decentralized training by letting multiple parties jointly compute over their separate datasets without revealing those datasets to one another.
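The idea behind secure multi-party computation can be illustrated with additive secret sharing, one of its basic building blocks. In the sketch below, three parties learn the sum of their private values without any party seeing another's input. The modulus, the party count, and the input values are assumptions for illustration; real protocols add authenticated channels and protection against dishonest parties.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this value


def share(value: int, n_parties: int) -> list[int]:
    """Split a value into n random shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any strict subset reveals nothing about the value."""
    return sum(shares) % MODULUS


# Hypothetical private inputs, one per party.
inputs = [120, 340, 75]
all_shares = [share(v, 3) for v in inputs]

# Party j receives the j-th share of every input and adds them locally.
partial_sums = [sum(s[j] for s in all_shares) % MODULUS for j in range(3)]

# Only the partial sums are published; together they reveal just the total.
print(reconstruct(partial_sums))  # 535
```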

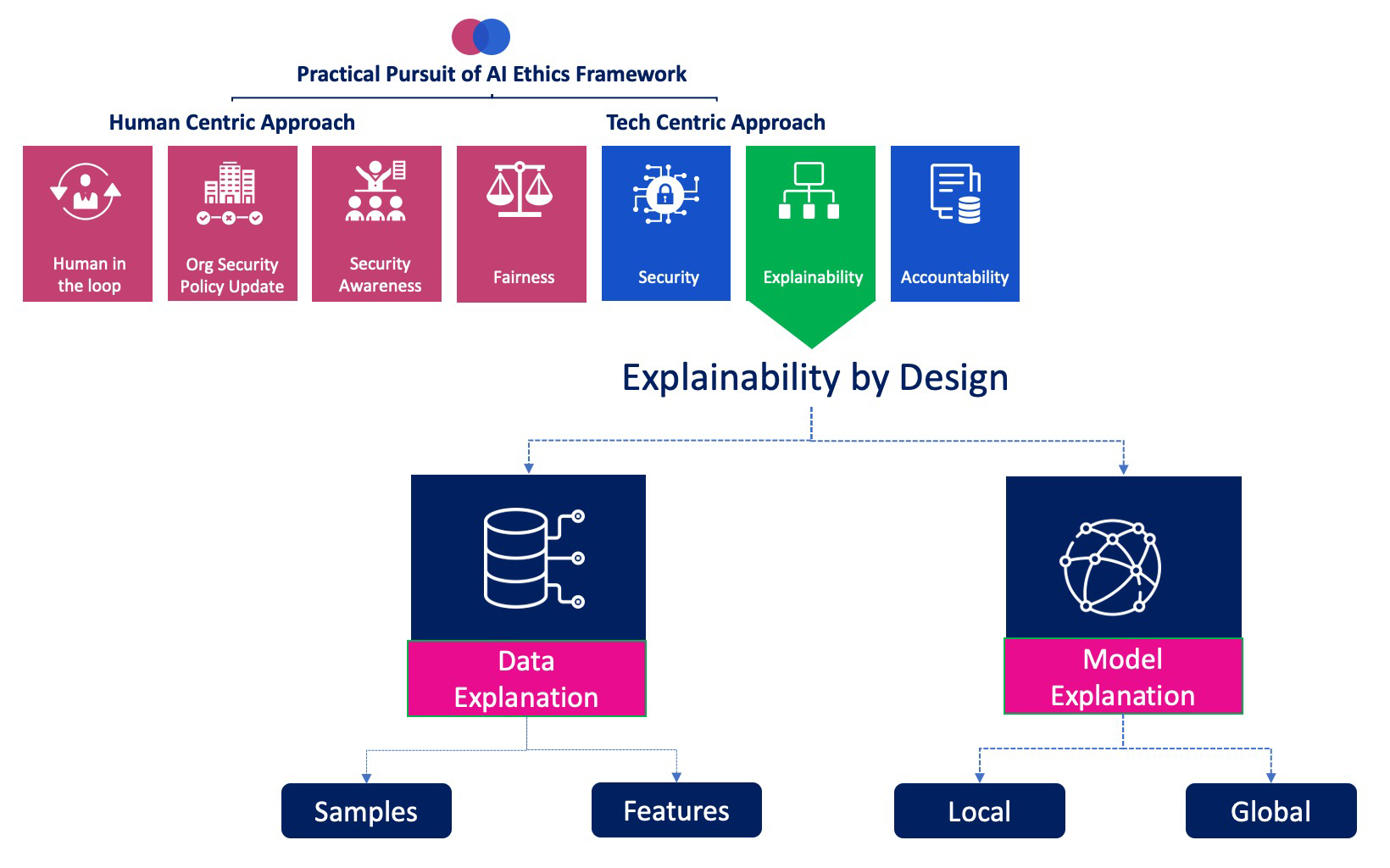

Explainability by Design

When developing ethical AI, transparency and precise model analysis are crucial, and it is vital that everyone involved can comprehend the process. One way to achieve this is by scrutinizing the structure of the model and providing clear explanations.

There are two methods to identify interpretable AI solutions. The first involves analyzing data characteristics, explaining local reasons for rejection, and analyzing the factors that impact model prediction. The second method utilizes model interpretability tools like Microsoft InterpretML and IBM AIX360. These tools can break down black box algorithms and deep neural networks into understandable features, layers, and parameters.

It is crucial to define the data and model accurately to create interpretable AI or simplify complex black box algorithms.

Data Explanation

When building an AI model, it is vital to understand the data and its features. The disentangled inferred prior variational autoencoder (DIP-VAE) algorithm learns disentangled feature representations that are easier to interpret, and ProtoDash selects representative prototype samples from a dataset.

Model Explanation

There are two types of AI models: white box and black box. White box models, such as decision trees, Boolean rule sets, and generalized additive models, expose their decision logic directly, so a reader can follow how each prediction is made. Black box models, by contrast, require an explanation model or interpretation tools before their decisions can be understood.

Global and local explanations are the two primary methods for analyzing AI models. A global explanation helps identify noteworthy features and variables in the model output, while a local explanation provides a breakdown of individual predictions. Several libraries are available for analyzing datasets and interpreting AI models, and a Python package on GitHub is known for unpacking data dimensions and building metric-based models.
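The difference between a global and a local explanation is easiest to see on a white box model. The sketch below uses a hypothetical linear scoring model: the local explanation shows how much each feature pushed one prediction away from the average case, and the global explanation averages the size of those contributions over the whole dataset. The weights and rows are invented for illustration, and this is not the method of any particular interpretability library.

```python
from statistics import mean

# Hypothetical linear model: score = sum(weight * feature value).
WEIGHTS = {"income": 0.5, "debt": -0.8, "tenure": 0.2}


def baseline(rows):
    """Average value of each feature across the dataset."""
    return {f: mean(r[f] for r in rows) for f in WEIGHTS}


def local_explanation(row, base):
    """Per-feature contribution of one row relative to the average row."""
    return {f: WEIGHTS[f] * (row[f] - base[f]) for f in WEIGHTS}


def global_explanation(rows):
    """Mean absolute contribution of each feature across all rows."""
    base = baseline(rows)
    return {
        f: mean(abs(local_explanation(r, base)[f]) for r in rows)
        for f in WEIGHTS
    }


rows = [
    {"income": 4.0, "debt": 1.0, "tenure": 2.0},
    {"income": 6.0, "debt": 3.0, "tenure": 2.0},
]

print("global:", global_explanation(rows))
print("local :", local_explanation(rows[0], baseline(rows)))
```

Here the global view ranks debt as the most influential feature overall, while the local view shows that, for the first applicant, below-average debt raised the score and below-average income lowered it.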

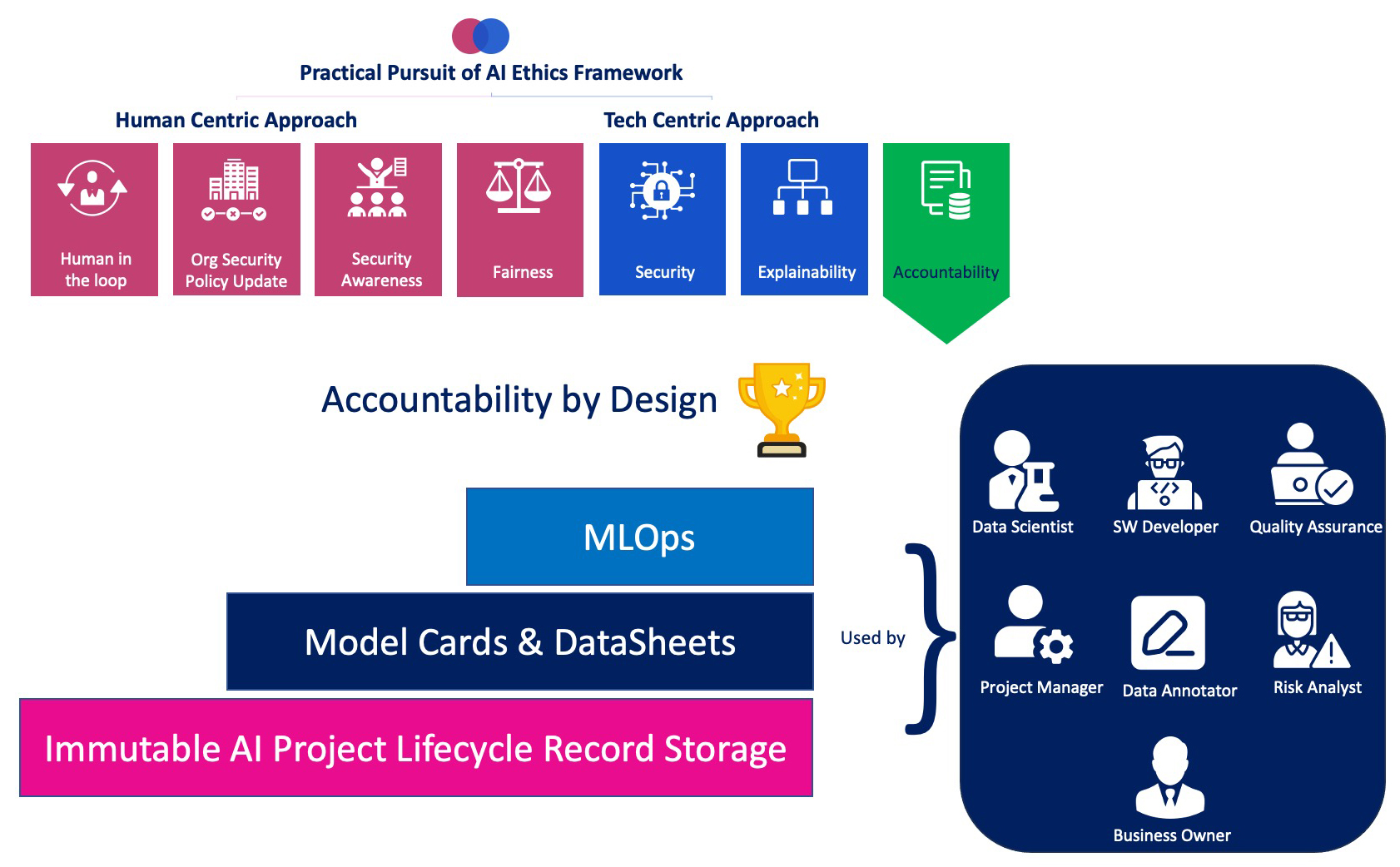

Accountability by Design

A permanent data repository should document various aspects of AI governance, including scope, collection methods, team details, testing results, and legal documents.
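A tamper-evident record of governance events can be approximated with a hash chain, in which every entry stores the hash of the one before it. The sketch below is a minimal in-memory illustration; the `AuditLog` class and the event fields are hypothetical, and a real deployment would persist entries to write-once storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log whose entries are linked by SHA-256 hashes."""

    def __init__(self):
        self._entries = []

    def append(self, event: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        entry_hash = _digest(prev_hash, event)
        self._entries.append(
            {"event": event, "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"action": "dataset_registered", "dataset_version": "v1"})
log.append({"action": "model_trained", "model_version": "1.0.0"})
print(log.verify())  # True
```

Because each hash depends on all earlier entries, an auditor who holds only the latest hash can detect any later change to the history.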

Datasheets for Datasets

Researchers, including several at Microsoft, have proposed datasheets for datasets, a practice for documenting the datasets used to train AI models. This documentation approach, akin to model cards, comprises thirty-one essential questions covering data collection, labeling, preprocessing, distribution, and maintenance. Its fundamental objective is to deliver precise and succinct information about the dataset in a form that users can easily comprehend.

Machine Learning Operations (MLOps) Tools

MLOps applies DevOps methodologies to AI development, testing, deployment, and monitoring so that teams can collaborate effectively. By leveraging MLOps tools, teams can track experiments, orchestrate workflows, control versions, and monitor models. This streamlined approach results in faster deployment, reduced risk, and continuous monitoring, all critical components of successful AI implementation.

Conclusion

Prioritizing Responsible AI

The possibilities for AI advancement are enormous; however, it is essential to prioritize ethical concerns such as fairness, security, explainability, and accountability to prevent any adverse effects on an organization’s image, finances, and legal standing. By embracing a responsible AI strategy, companies can capitalize on the growth of AI technology while promoting ethical behavior that benefits society and their workers, which also helps to build a trustworthy relationship with stakeholders.

Bibliography

- “Ethics of Artificial Intelligence.” Ethics of Artificial Intelligence | UNESCO, 1 Jan. 2022, unesco.org/en/artificial-intelligence/recommendation-ethics Accessed 20 Dec. 2022.

- “Top 9 Ethical Issues in Artificial Intelligence.” World Economic Forum, weforum.org/agenda/2016/10/top-10-ethical-issues-in-artificial-intelligence Accessed 20 Dec. 2022.

- “AI Gone Wrong Examples…” Analytics Insight…, analyticsinsight.net/famous-ai-gone-wrong-examples-in-the-real-world-we-need-to-know Accessed 20 Dec. 2022.

- “57+ Amazing Artificial Intelligence Statistics (2023).” Exploding Topics, 17 Aug. 2021, explodingtopics.com/blog/ai-statistics Accessed 20 Dec. 2022.

- Loukides, Mike. “AI Adoption in the Enterprise 2022.” O’Reilly Media, 31 Mar. 2022, oreilly.com/radar/ai-adoption-in-the-enterprise-2022 Accessed 20 Dec. 2022.

- “Ethics Guidelines for Trustworthy AI – FUTURIUM – European Commission.” FUTURIUM – European Commission, 17 Dec. 2018, ec.europa.eu/futurium/en/ai-alliance-consultation Accessed 25 Dec. 2022.

- “OECD’s Live Repository of AI Strategies and Policies – OECD.AI.” OECD’s Live Repository of AI Strategies & Policies – OECD.AI, oecd.ai/en/dashboards/overview Accessed 25 Dec. 2022.

- “Australia’s AI Ethics Principles.” Australia’s AI Ethics Principles | Australia’s Artificial Intelligence Ethics Framework | Department of Industry, Science and Resources, industry.gov.au/publications/australias-artificial-intelligence-ethics-framework/australias-ai-ethics-principles Accessed 25 Dec. 2022.

- “Penalties of the EU AI Act: The High Cost of Non-Compliance.” Penalties of the EU AI Act: The High Cost of Non-Compliance, 24 Nov. 2022, holisticai.com/blog/penalties-of-the-eu-ai-act Accessed 25 Dec. 2022.

- “9 Ethical AI Principles for Organizations to Follow.” World Economic Forum, weforum.org/agenda/2021/06/ethical-principles-for-ai Accessed 25 Dec. 2022.

- Aggarwal, Gaurav. “Council Post: How Humans-In-The-Loop AI Can Help Solve the Data Problem.” Forbes, 27 Oct. 2021, forbes.com/sites/forbestechcouncil/2021/10/27/how-humans-in-the-loop-ai-can-help-solve-the-data-problem Accessed 25 Dec. 2022.

- “AI Fairness Is Not Just an Ethical Issue.” Harvard Business Review, 20 Oct. 2020, hbr.org/2020/10/ai-fairness-isnt-just-an-ethical-issue Accessed 25 Dec. 2022.

- Thaine, Patricia. “Perfectly Privacy-Preserving AI.” Medium, 8 Oct. 2020, towardsdatascience.com/perfectly-privacy-preserving-ai-c14698f322f5 Accessed 1 Jan. 2023.

- Kaissis, Georgios A., et al. “Secure, Privacy-preserving and Federated Machine Learning in Medical Imaging – Nature Machine Intelligence.” Nature, 8 June 2020, nature.com/articles/s42256-020-0186-1 Accessed 1 Jan. 2023.

- “InterpretML.” InterpretML, interpretml.github.io Accessed 5 Jan. 2023.

- Trusted-AI. “GitHub – Trusted-AI/AIX360: Interpretability and Explainability of Data and Machine Learning Models.” GitHub, 3 Nov. 2022, github.com/Trusted-AI/AIX360 Accessed 5 Jan. 2023.

- Trusted-AI. “AIX360/dipvae.py at Master · Trusted-AI/AIX360.” GitHub, github.com/Trusted-AI/AIX360 Accessed 5 Jan. 2023.

- Trusted-AI. “AIX360/PDASH.py at Master · Trusted-AI/AIX360.” GitHub, github.com/Trusted-AI/AIX360 Accessed 5 Jan. 2023.

- Gebru, Timnit, et al. “Datasheets for Datasets.” arXiv.org, 23 Mar. 2018, www.arxiv.org/abs/1803.09010v8 Accessed 5 Jan. 2023.

- “ml-ops.org.” ML Ops: Machine Learning Operations, ml-ops.org Accessed 5 Jan. 2023.